The Epistemic Gap: Why Standard XAI Fails in Legal Reasoning

The core problem is that AI explanations and legal justifications operate on different epistemic planes. AI provides technical traces of decision-making, while law demands structured, precedent-driven justification. Standard XAI techniques such as attention maps and counterfactuals fail to bridge this gap.

Attention Maps and Legal Hierarchies

Attention heatmaps highlight the text segments a model weighted most heavily when producing its output. In legal NLP, a heatmap might show that the model weighted statutory text, cited precedents, or factual passages. But such surface-level focus ignores the hierarchical depth of legal reasoning, where the ratio decidendi (the principle on which a case was actually decided) matters more than how often a phrase occurs. Attention explanations risk creating an illusion of understanding, because they display statistical correlations rather than the layered authority structure of law. Since law derives validity from a hierarchy (statutes → precedents → principles), flat attention weights cannot meet the standard of legal justification.

Counterfactuals and Discontinuous Legal Rules

Counterfactuals ask, “what if X were different?” They are helpful in exploring liability (e.g., whether a defendant’s mental state was negligent or reckless) but are misaligned with law’s discontinuous rules: a small factual change can make an entire legal framework inapplicable, producing non-linear shifts in outcome. Simple counterfactuals may therefore be technically accurate yet legally meaningless. Moreover, psychological research shows that jurors’ reasoning can be biased by irrelevant but vivid counterfactuals (e.g., that a cyclist had taken an “unusual” route), distorting legal judgment. Counterfactuals thus fail both technically (discontinuity) and psychologically (bias induction).
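The discontinuity can be made concrete with a small sketch. The rule below is hypothetical (punitive damages require at least recklessness and a loss above an assumed threshold of 10,000) and is not drawn from any jurisdiction; the names `Case`, `punitive_damages_available`, and `nearest_flips` are likewise illustrative. A brute-force search over one-step counterfactuals shows that moving the mental state down a single category flips the whole outcome, while a one-unit change in the loss usually changes nothing.

```python
from dataclasses import dataclass, replace

# Hypothetical ordered scale of mental states (illustrative, not a real statute).
MENTAL_STATES = ["none", "negligent", "reckless", "intentional"]
LOSS_THRESHOLD = 10_000  # assumed monetary threshold


@dataclass(frozen=True)
class Case:
    mental_state: str
    loss: float


def punitive_damages_available(case: Case) -> bool:
    """Hypothetical step-function rule: at least recklessness AND loss above threshold."""
    culpable = MENTAL_STATES.index(case.mental_state) >= MENTAL_STATES.index("reckless")
    return culpable and case.loss > LOSS_THRESHOLD


def nearest_flips(case: Case) -> list[tuple[str, Case]]:
    """Try one-step counterfactuals and keep those that change the outcome."""
    base = punitive_damages_available(case)
    idx = MENTAL_STATES.index(case.mental_state)
    candidates: list[tuple[str, Case]] = []
    # Move the mental state one category down or up the ordered scale.
    for j in (idx - 1, idx + 1):
        if 0 <= j < len(MENTAL_STATES):
            candidates.append(
                (f"mental_state -> {MENTAL_STATES[j]}", replace(case, mental_state=MENTAL_STATES[j]))
            )
    # Nudge the loss by one unit in either direction.
    for delta in (-1.0, 1.0):
        candidates.append((f"loss {delta:+.0f}", replace(case, loss=case.loss + delta)))
    return [(label, c) for label, c in candidates if punitive_damages_available(c) != base]


if __name__ == "__main__":
    for label, _ in nearest_flips(Case("reckless", 50_000.0)):
        print(label)  # prints "mental_state -> negligent"
```

For `Case("reckless", 50_000.0)` the only flipping counterfactual is the shift to negligence. That is accurate as a statement about the rule's behavior, yet it says nothing about why recklessness is the legal dividing line.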

Technical Explanation vs. Legal Justification

A key distinction exists between AI explanations (causal understanding of outputs) and legal explanations (reasoned justification of authority). Courts require legally sufficient reasoning, not mere transparency of model mechanics. A “common law of XAI” will likely evolve, defining sufficiency case by case. Importantly, the legal system does not need AI to “think like a lawyer,” but to “explain itself to a lawyer” in justificatory terms. This reframes the challenge as one of knowledge representation and interface design: AI must translate its correlational outputs into coherent, legally valid chains of reasoning comprehensible to legal professionals and decision-subjects.

A Path Forward: Designing XAI for Structured Legal Logic

To overcome current XAI limits, future systems must align with legal reasoning’s structured, hierarchical logic. A hybrid architecture combining formal argumentation frameworks with LLM-based narrative generation offers a path forward.

Argumentation-Based XAI

Formal argumentation frameworks shift the focus from feature attribution to reasoning structure. They model arguments as graphs of support/attack relations, explaining an outcome as a chain of arguments that prevail over their counterarguments. For example, A1 (“Contract invalid due to missing signatures”) attacks A2 (“Valid due to verbal agreement”); unless some further argument defeats A1, A1 prevails and the contract is held invalid. This approach directly addresses legal explanation needs: resolving conflicts of norms, applying rules to facts, and justifying interpretive choices. Frameworks like ASPIC+ formalize such reasoning, producing transparent, defensible “why” explanations that mirror adversarial legal practice and go beyond a simplistic account of “what happened.”
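A minimal sketch of the attack-graph idea, assuming Dung-style abstract argumentation with grounded semantics (the most skeptical of the standard semantics). ASPIC+ itself first builds structured arguments from rules and premises and only then evaluates them at this abstract level; that construction step is omitted here, and the function name `grounded_extension` is illustrative.

```python
def grounded_extension(arguments: set[str], attacks: set[tuple[str, str]]) -> set[str]:
    """Least fixed point of Dung's characteristic function, computed by iteration."""
    attackers = {a: {x for (x, y) in attacks if y == a} for a in arguments}
    accepted: set[str] = set()
    while True:
        # `a` is acceptable if each of its attackers is attacked by an accepted argument.
        defended = {
            a
            for a in arguments
            if all(any((d, b) in attacks for d in accepted) for b in attackers[a])
        }
        if defended == accepted:
            return accepted
        accepted = defended


if __name__ == "__main__":
    args = {"A1", "A2"}  # A1: missing signatures; A2: verbal agreement
    print(sorted(grounded_extension(args, {("A1", "A2")})))  # ['A1']
    # Hypothetical A3 ("Signatures were supplied later") attacks A1 and so defends A2.
    print(sorted(grounded_extension(args | {"A3"}, {("A1", "A2"), ("A3", "A1")})))  # ['A2', 'A3']
```

With only A1 attacking A2, the grounded extension is {A1}, so the contract is invalid. Adding the hypothetical A3 reinstates A2 because A3 defends it. The accepted set, together with the attacks that justify it, is the explanation.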

LLMs for Narrative Explanations

Formal frameworks ensure structure but lack natural readability. Large Language Models (LLMs) can bridge this by translating structured logic into coherent, human-centric narratives. Studies show LLMs can apply doctrines like the rule against surplusage by detecting its logic in opinions even when unnamed, demonstrating their capacity for subtle legal analysis. In a hybrid system, the argumentation core provides the verified reasoning chain, while the LLM serves as a “legal scribe,” generating accessible memos or judicial-style explanations. This combines symbolic transparency with neural narrative fluency. Crucially, human oversight is needed to prevent LLM hallucinations (e.g., fabricated case law). Thus, LLMs should assist in explanation, not act as the source of legal truth.
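One way to keep the LLM in the “scribe” role is to fix the outcome in the argumentation core, hand the model only a serialized, already-evaluated graph, and check its draft mechanically before human review. The sketch below assumes bracketed argument IDs (e.g. `[A1]`) as the citation convention; `build_scribe_prompt` and `unknown_citations` are hypothetical helpers, and no particular LLM API is assumed, so the model call itself is left out.

```python
import re

CITATION = re.compile(r"\[([A-Za-z]\w*)\]")  # assumed convention: IDs like [A1]


def build_scribe_prompt(claims: dict[str, str], attacks: set[tuple[str, str]], accepted: set[str]) -> str:
    """Serialize an already-evaluated argument graph for an LLM 'scribe'.
    The outcome is fixed here; the model is only asked to narrate it."""
    lines = [
        "Write a short legal memo explaining the outcome below.",
        "Cite arguments only by their bracketed IDs and add no other authorities.",
    ]
    for arg_id in sorted(claims):
        status = "ACCEPTED" if arg_id in accepted else "REJECTED"
        lines.append(f"[{arg_id}] ({status}) {claims[arg_id]}")
    for attacker, target in sorted(attacks):
        lines.append(f"[{attacker}] attacks [{target}]")
    return "\n".join(lines)


def unknown_citations(narrative: str, claims: dict[str, str]) -> set[str]:
    """IDs cited in the draft that do not exist in the graph (possible fabrication)."""
    return {ref for ref in CITATION.findall(narrative) if ref not in claims}


if __name__ == "__main__":
    claims = {
        "A1": "Contract invalid due to missing signatures",
        "A2": "Valid due to verbal agreement",
    }
    print(build_scribe_prompt(claims, {("A1", "A2")}, accepted={"A1"}))
    draft = "Because [A1] defeats [A2], and as held in [A9], the contract is invalid."
    print(unknown_citations(draft, claims))  # {'A9'}
```

The citation check only catches references to arguments that do not exist in the graph. It cannot detect a misstated holding or an invented authority written without brackets, which is why human oversight remains necessary.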

The Regulatory Imperative: Navigating GDPR and the EU AI Act

Legal AI is shaped by GDPR and the EU AI Act, which impose complementary duties of transparency and explainability.

GDPR and the “Right to Explanation”

Scholars debate whether the GDPR creates a binding “right to explanation.” Still, Articles 13–15, read with Article 22 and Recital 71, establish a de facto right to “meaningful information about the logic involved” in automated decisions with legal or similarly significant effects (e.g., bail, sentencing, loan denial). A key nuance is that Article 22 covers only “solely automated” decisions, i.e. those made without human intervention. Adding a human reviewer can take a decision outside this category. The Article 29 Working Party’s guidelines on automated decision-making state that token human involvement does not suffice, but superficial review remains an easy route to nominal compliance that undermines the safeguards. France’s Digital Republic Act addresses this gap by explicitly covering decision-support systems.

EU AI Act: Risk and Systemic Transparency

The AI Act applies a risk-based framework with four tiers: unacceptable, high, limited, and minimal risk. AI systems used in the administration of justice are explicitly listed as high-risk. Providers of high-risk AI systems (HRAIS) must meet the transparency obligations of Article 13, designing systems so that deployers can interpret their output and supplying clear “instructions for use,” and must design them to allow effective human oversight under Article 14. Registration of HRAIS in a public EU database adds systemic transparency, moving beyond individual rights toward public accountability.

The following table provides a comparative analysis of these two crucial European legal frameworks:

Legally Informed XAI

Different stakeholders require tailored explanations:

- Decision-subjects (e.g., defendants) need legally actionable explanations that they can use to challenge the decision.

- Judges/decision-makers need legally informative justifications tied to principles and precedents.

- Developers/regulators need technical transparency to detect bias or audit compliance.

Explanation design must therefore ask “who needs what kind of explanation, and for what legal purpose?” rather than assume a one-size-fits-all approach.

The Practical Paradox: Transparency vs. Confidentiality

Explanations must be transparent but risk exposing sensitive data, privilege, or proprietary information.

GenAI and Privilege Risks

Use of public generative AI (GenAI) tools in legal practice threatens attorney-client privilege. ABA Formal Opinion 512 stresses lawyers’ duties of technological competence, output verification, and confidentiality. Attorneys must not disclose client data to a GenAI tool unless confidentiality is assured, and informed client consent may be required before using self-learning tools. Because privilege depends on a reasonable expectation of confidentiality, entering client data into public models like ChatGPT risks data retention, reuse for training, or exposure via shareable links, undermining confidentiality and creating discoverable “records.” Safeguarding privilege thus requires strict controls and proactive compliance strategies.
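Firms can operationalize such controls as a gate that runs before any client data reaches a GenAI tool. The sketch below is a hypothetical policy check, not a restatement of what ABA Formal Opinion 512 requires: the attributes `contractual_confidentiality` and `trains_on_inputs`, the consent flag, and the function `may_submit_client_data` are assumptions about how a firm might record vendor terms and client instructions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenAITool:
    name: str
    contractual_confidentiality: bool  # assumed: vendor contractually bars retention/disclosure
    trains_on_inputs: bool             # assumed: "self-learning", inputs may be reused for training


@dataclass(frozen=True)
class Matter:
    client: str
    informed_consent_for_self_learning: bool  # assumed record of the client's informed consent


def may_submit_client_data(tool: GenAITool, matter: Matter) -> tuple[bool, str]:
    """Hypothetical pre-submission gate mirroring the duties summarized above."""
    if not tool.contractual_confidentiality:
        return False, f"{tool.name}: no confidentiality guarantee"
    if tool.trains_on_inputs and not matter.informed_consent_for_self_learning:
        return False, f"{tool.name}: self-learning tool requires informed client consent"
    return True, "ok"


if __name__ == "__main__":
    public_chat = GenAITool("PublicChat", contractual_confidentiality=False, trains_on_inputs=True)
    matter = Matter("Acme", informed_consent_for_self_learning=False)
    print(may_submit_client_data(public_chat, matter))  # (False, 'PublicChat: no confidentiality guarantee')
```

Returning a reason string alongside the decision gives the firm an auditable record of why a submission was blocked.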

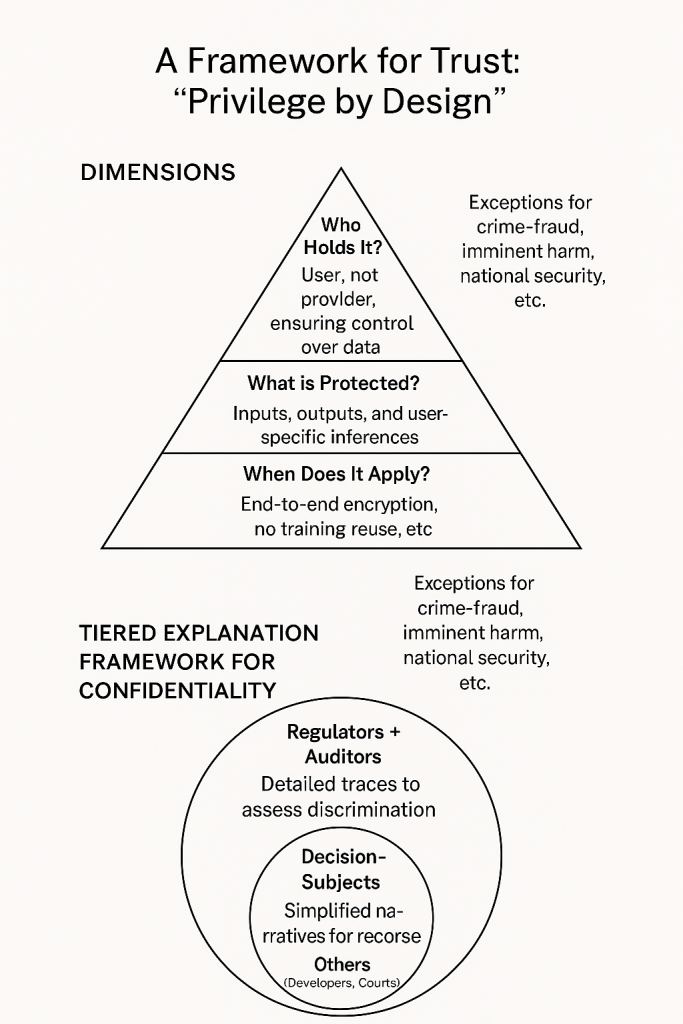

A Framework for Trust: “Privilege by Design”

To address risks to confidentiality, the concept of AI privilege or “privilege by design” has been proposed as a sui generis legal framework recognizing a new confidential relationship between humans and intelligent systems. Privilege attaches only if providers meet defined technical and organizational safeguards, creating incentives for ethical AI design.

Three Dimensions:

Exceptions apply for overriding public interests (crime-fraud, imminent harm, national security).

Tiered Explanation Framework: To resolve the transparency–confidentiality paradox, a tiered governance model provides stakeholder-specific explanations:

- Regulators/auditors: detailed, technical outputs (e.g., raw argumentation framework traces) to assess bias or discrimination.

- Decision-subjects: simplified, legally actionable narratives (e.g., LLM-generated memos) enabling contestation or recourse.

- Others (e.g., developers, courts): tailored levels of access depending on role.

Much like other tiered regimes such as AI export controls or AI talent classifications, this model aims to provide “just enough” disclosure for accountability while protecting proprietary systems and sensitive client data.
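The tiered model amounts to role-based filtering of a single underlying explanation record. In the sketch below, the roles, the field names (`argument_trace`, `bias_metrics`, `narrative`, `how_to_contest`), and the mapping between them are hypothetical; in practice the policy would be set by the governing legal framework and by contract.

```python
from enum import Enum


class Role(Enum):
    REGULATOR = "regulator"
    COURT = "court"
    DEVELOPER = "developer"
    DECISION_SUBJECT = "decision_subject"


# Hypothetical mapping from role to the explanation fields it may see.
DISCLOSURE_POLICY: dict[Role, frozenset[str]] = {
    Role.REGULATOR: frozenset({"argument_trace", "bias_metrics", "narrative"}),
    Role.COURT: frozenset({"argument_trace", "narrative"}),
    Role.DEVELOPER: frozenset({"argument_trace", "bias_metrics"}),
    Role.DECISION_SUBJECT: frozenset({"narrative", "how_to_contest"}),
}


def explanation_view(full_record: dict[str, object], role: Role) -> dict[str, object]:
    """Return only the fields this role is entitled to; everything else is withheld."""
    allowed = DISCLOSURE_POLICY[role]
    return {key: value for key, value in full_record.items() if key in allowed}


if __name__ == "__main__":
    record = {
        "argument_trace": ["A1 attacks A2", "grounded: {A1}"],
        "bias_metrics": {"parity_gap": 0.02},
        "narrative": "The contract is invalid because signatures were missing.",
        "how_to_contest": "File an appeal within the statutory period.",
        "client_data": "privileged material",
    }
    print(sorted(explanation_view(record, Role.DECISION_SUBJECT)))  # ['how_to_contest', 'narrative']
```

Fields absent from every role's allowance, such as the raw client data in this example, are never released, which is how the design keeps disclosure to “just enough.”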


Aabis Islam is a student pursuing a BA LLB at National Law University, Delhi. With a strong interest in AI Law, Aabis is passionate about exploring the intersection of artificial intelligence and legal frameworks. Dedicated to understanding the implications of AI in various legal contexts, Aabis is keen on investigating the advancements in AI technologies and their practical applications in the legal field.